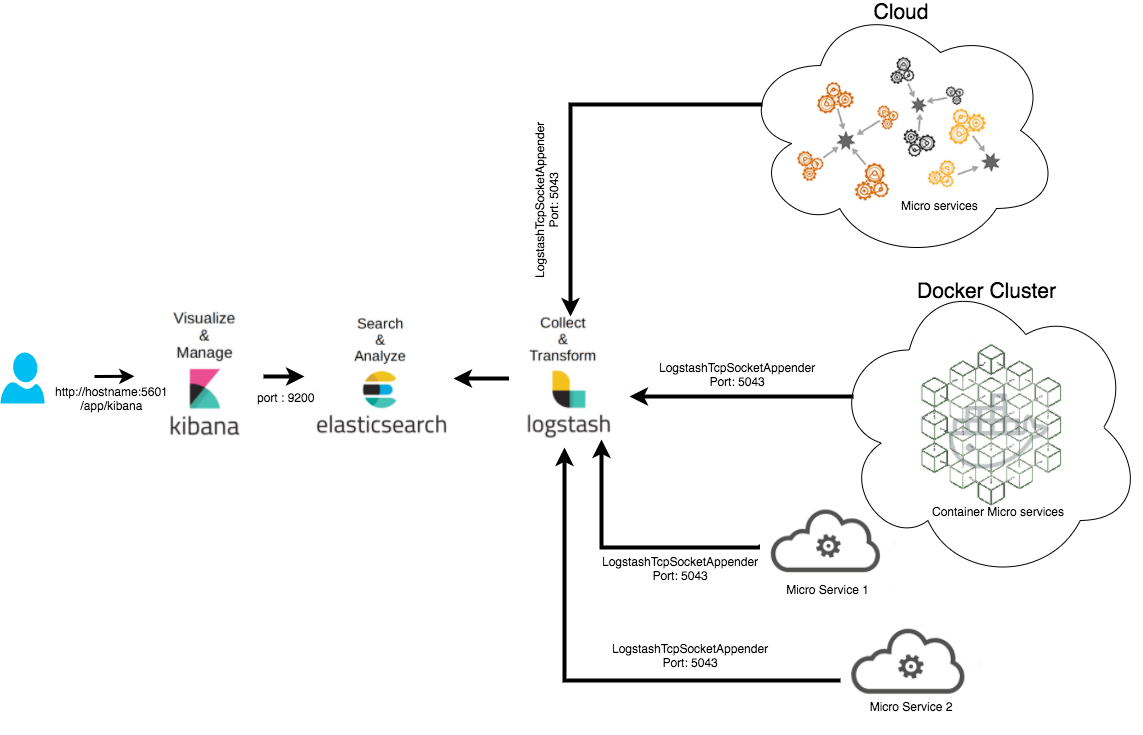

Centralized Log Aggregation & Visualization using ELK Stack for Microservice Architecture with Spring Cloud for Centralized Configuration

Log aggregation, visualization, monitoring and analysis of microservices in the cloud using the ELK Stack (Elasticsearch, Logstash and Kibana)

Introduction

Storing logs from different microservices in reliable storage in a timely manner, retrieving them easily, and analyzing them for diagnosis are essential for any cloud-based microservice architecture. There are many options for log aggregation in such an environment. This article gives a working example of log aggregation using the ELK stack in a Spring Boot based microservice implementation, where Spring Cloud Config is used as the centralized configuration store. The logging library is Logback (ch.qos.logback), and LogstashTcpSocketAppender sends log messages directly to Logstash, which stores them in Elasticsearch. Finally, you can view and analyze all the logs using Kibana.

ELK Installation & Configurations

In this article, Ubuntu 16.04 is used to demonstrate all the installation steps.

1. Elasticsearch

Elasticsearch is a highly scalable open-source full-text search and analytics engine. It allows you to store, search, and analyze big volumes of data quickly and in near real time. It is generally used as the underlying engine/technology that powers applications that have complex search features and requirements.

Installation

Download the required package for your operating system from the Elastic website.

For Ubuntu, I downloaded the Debian (.deb) package.

Then install the package using

$ sudo dpkg -i elasticsearch-5.6.3.deb

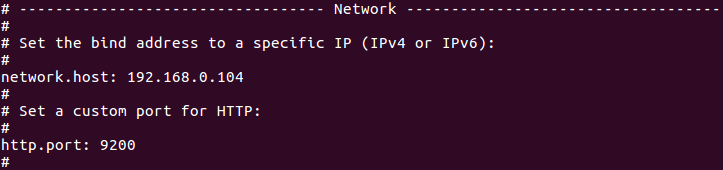

The default installation directory of Elasticsearch is /usr/share/elasticsearch, and its configuration file is located at /etc/elasticsearch/elasticsearch.yml. To access Elasticsearch from outside the machine, set the network.host and http.port properties to a valid IP address and port number. Below is my network configuration in elasticsearch.yml.

# ---------------------------------- Network -----------------------------------
#
# Set the bind address to a specific IP (IPv4 or IPv6):
#
network.host: 192.168.0.104
#
# Set a custom port for HTTP:
#
http.port: 9200
#
# For more information, consult the network module documentation.

Start the Elasticsearch service using

$ sudo service elasticsearch start

Verification

You can check the service status using

$ sudo service elasticsearch status

If the status shows the service as active (running), Elasticsearch has started correctly.

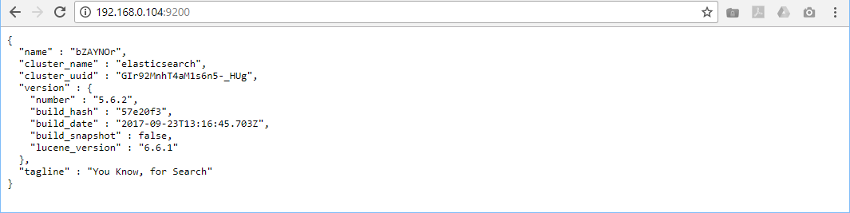

Now you can test Elasticsearch by opening http://192.168.0.104:9200 in a browser; it should respond with a JSON document describing the node and its version.
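If you want to script this check (for example, before starting Logstash), a minimal Java 8 sketch using only the JDK's HttpURLConnection is shown below. It treats an HTTP 200 from the node's root URL as "up" and any connection failure as "down"; the class and method names are illustrative, not part of any Elastic client library.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URL;

/** Sketch: report whether an Elasticsearch node answers HTTP 200 on its root URL. */
final class ElasticsearchPing {

    private ElasticsearchPing() {
    }

    static boolean isUp(String baseUrl, int timeoutMillis) {
        HttpURLConnection conn = null;
        try {
            conn = (HttpURLConnection) new URL(baseUrl).openConnection();
            conn.setRequestMethod("GET");
            conn.setConnectTimeout(timeoutMillis);
            conn.setReadTimeout(timeoutMillis);
            return conn.getResponseCode() == HttpURLConnection.HTTP_OK;
        } catch (IOException e) {
            // Connection refused, timeout, unknown host and similar failures mean "not up".
            return false;
        } finally {
            if (conn != null) {
                conn.disconnect();
            }
        }
    }

    public static void main(String[] args) {
        // Default matches the network.host/http.port configured above.
        String url = args.length > 0 ? args[0] : "http://192.168.0.104:9200";
        System.out.println(url + " up: " + isUp(url, 2000));
    }
}
```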

2. Kibana

Kibana is an open source analytics and visualization platform designed to work with Elasticsearch. You use Kibana to search, view, and interact with data stored in Elasticsearch indices. You can easily perform advanced data analysis and visualize your data in a variety of charts, tables, and maps.

Kibana makes it easy to understand large volumes of data. Its simple, browser-based interface enables you to quickly create and share dynamic dashboards that display changes to Elasticsearch queries in real time.

Installation

Download the required package for your operating system from the Elastic website.

For Ubuntu, I downloaded the Debian (.deb) package.

Then install the package using

$ sudo dpkg -i kibana-5.6.3-amd64.deb

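The default installation directory of Kibana is /usr/share/kibana, and its configuration file is located at /etc/kibana/kibana.yml. To access Kibana from outside the machine, set the server.port and server.host properties to a valid port number and IP address. Because Elasticsearch is bound to 192.168.0.104 rather than localhost, you will likely also need to set elasticsearch.url in kibana.yml to http://192.168.0.104:9200 (its default is http://localhost:9200). Below is my port and host configuration in kibana.yml.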

# Kibana is served by a back end server. This setting specifies the port to use.
server.port: 5601

# Specifies the address to which the Kibana server will bind. IP addresses and host names are both valid values.
# The default is 'localhost', which usually means remote machines will not be able to connect.
# To allow connections from remote users, set this parameter to a non-loopback address.
server.host: "192.168.0.104"

Starting Kibana Service

$ sudo service kibana start

Verification

You can check the service status using

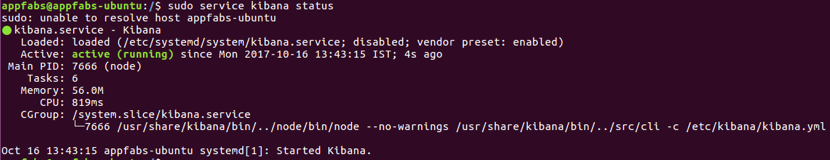

$ sudo service kibana status

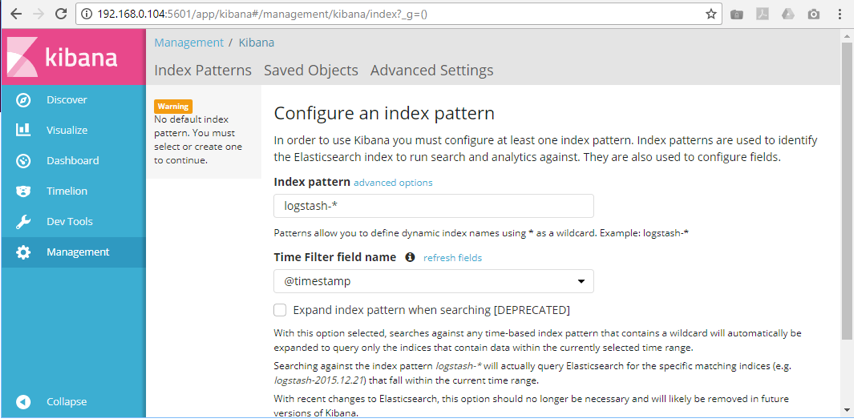

Now open http://192.168.0.104:5601 in your web browser to bring up the Kibana web interface.

3. Logstash

Logstash is an open source data collection engine with real-time pipelining capabilities. Logstash can dynamically unify data from disparate sources and normalize the data into destinations of your choice. Cleanse and democratize all your data for diverse advanced downstream analytics and visualization use cases.

Installation

Download the required package for your operating system from the Elastic website.

Then install the package using

$ sudo dpkg -i logstash-5.6.3.deb

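The package is now installed in /usr/share/logstash, and the Logstash configuration files are in /etc/logstash/. For remote access, edit logstash.yml and set the http.host property to a valid IP address. Note that this setting only controls the bind address of the Logstash metrics REST endpoint; the tcp input configured below listens on all interfaces (0.0.0.0) by default. Below is my host configuration in logstash.yml.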

# ------------ Metrics Settings --------------
#
# Bind address for the metrics REST endpoint
#
http.host: "192.168.0.104"
#

Logstash configuration

Create a configuration file named appfabs-log-configuration.conf in /usr/share/logstash/ with the following entries.

input {
  tcp {
    port => 5043
    codec => json_lines
  }
}

filter {
}

output {
  stdout {
    codec => rubydebug
  }
  elasticsearch {
    hosts => "192.168.0.104:9200"
    index => "appfabs-logback"
  }
}

Here, the tcp input listens on port 5043, receives logs from the different microservices, and passes them to the configured outputs. Because stdout is configured, each event is printed to the console; the same events are also sent to Elasticsearch at the given host and stored in the given index. This index name is used later to create the index pattern in Kibana.
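To confirm that events are actually reaching the appfabs-logback index without going through Kibana, you can call Elasticsearch's URI search endpoint (/{index}/_search?q=...). The sketch below, with hypothetical class and method names, builds that URL and performs a plain GET using only JDK classes; the query level:WARN assumes the level field that LogstashEncoder adds to each event.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UnsupportedEncodingException;
import java.net.HttpURLConnection;
import java.net.URL;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

/** Sketch: query the appfabs-logback index through Elasticsearch's URI search API. */
final class LogIndexSearch {

    private LogIndexSearch() {
    }

    /** Builds {esBase}/{index}/_search?q={query}&size={size}, URL-encoding the query. */
    static String searchUrl(String esBase, String index, String luceneQuery, int size) {
        try {
            String q = URLEncoder.encode(luceneQuery, "UTF-8");
            return esBase + "/" + index + "/_search?q=" + q + "&size=" + size;
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always supported", e);
        }
    }

    /** Performs a GET and returns the response body as a UTF-8 string. */
    static String get(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        try (InputStream in = conn.getInputStream();
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            byte[] buf = new byte[8192];
            int n;
            while ((n = in.read(buf)) != -1) {
                out.write(buf, 0, n);
            }
            return new String(out.toByteArray(), StandardCharsets.UTF_8);
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) throws IOException {
        String url = searchUrl("http://192.168.0.104:9200", "appfabs-logback", "level:WARN", 10);
        // Prints the JSON search response returned by Elasticsearch.
        System.out.println(get(url));
    }
}
```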

Running Logstash

In a terminal, go to /usr/share/logstash and run the configuration created above with the following command.

$ bin/logstash -f appfabs-log-configuration.conf

If everything is fine, Logstash starts listening for log input on TCP port 5043.
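Before wiring up the microservices, you can check the pipeline end to end by writing one event to the tcp input yourself. Because the input uses the json_lines codec, each event must be a single JSON object terminated by a newline. The Java 8 sketch below uses a hypothetical class name, hand-rolled JSON escaping instead of a JSON library, and illustrative field names (level, message); if it works, the event should appear on the Logstash console and in the appfabs-logback index.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

/** Sketch: send one newline-delimited JSON event to a Logstash tcp/json_lines input. */
final class JsonLinesSender {

    private JsonLinesSender() {
    }

    /** Escapes a string for use inside a JSON string literal. */
    static String jsonEscape(String s) {
        StringBuilder sb = new StringBuilder(s.length() + 8);
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        // Other control characters use the \\uXXXX form.
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    /** One json_lines event: a single JSON object terminated by '\n'. */
    static String toJsonLine(String level, String message) {
        return "{\"level\":\"" + jsonEscape(level) + "\",\"message\":\"" + jsonEscape(message) + "\"}\n";
    }

    static void send(String host, int port, String line) throws IOException {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), 2000);
            OutputStream out = socket.getOutputStream();
            out.write(line.getBytes(StandardCharsets.UTF_8));
            out.flush();
        }
    }

    public static void main(String[] args) throws IOException {
        // Host and port of the tcp input configured in appfabs-log-configuration.conf.
        send("192.168.0.104", 5043, toJsonLine("INFO", "hello from a hand-written event"));
    }
}
```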

Code for Generating Logs

On the source code side, two main parts are required:

- A centralized configuration store using Spring Cloud

- Microservices that fetch their configuration from the centralized store, generate logs based on that configuration, and send those logs to Logstash, which stores them in Elasticsearch.

1. A centralized configuration store using Spring Cloud

This part itself has two components:

- A git repository for configuration storage

- A Spring Cloud Config server application that reads the configuration from that repository and makes it available to all the microservices (consumers).

Git Repository

Below is the Git repository created to store the configuration for this article.

https://github.com/appfabs/appfabs.sample.springcloud-config-repo.git

The master branch contains a properties file named appfabs-logaggregator-config-dev.properties for the dev profile. Its content is shown below.

#Tomcat Port Configuration
spring-customer-service.server.port =8001
spring-supplier-service.server.port =8002

#Logger configuration
logging.spring-customer-service.file =spring-customer-service.log
logging.spring-supplier-service.file =spring-supplier-service.log
logging.filesize.max =100MB
logging.archivesize.max =10GB
logging.history.max =60
logging.dev.loglevel =DEBUG
logging.staging.loglevel =INFO
logging.production.loglevel =INFO
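This is an ordinary Java .properties file whose name follows the {application}-{profile}.properties convention (application appfabs-logaggregator-config, profile dev, matching spring.application.name and spring.profiles.active in the clients' bootstrap.properties). You can therefore sanity-check it locally before pushing. The hypothetical helper below uses only java.util.Properties, and also shows that the space before = in lines such as spring-customer-service.server.port =8001 is ignored by the standard Java parser.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

/** Sketch: parse a config-repository .properties file with the JDK's own parser. */
final class ConfigFileCheck {

    private ConfigFileCheck() {
    }

    static Properties parse(String content) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(content));
        } catch (IOException e) {
            // Not expected for an in-memory StringReader.
            throw new IllegalStateException(e);
        }
        return props;
    }

    public static void main(String[] args) {
        String content = "#Tomcat Port Configuration\n"
                + "spring-customer-service.server.port =8001\n"
                + "#Logger configuration\n"
                + "logging.filesize.max =100MB\n";
        Properties props = parse(content);
        // The space before '=' is part of neither the key nor the value.
        System.out.println(props.getProperty("spring-customer-service.server.port")); // 8001
        System.out.println(props.getProperty("logging.filesize.max")); // 100MB
    }
}
```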

Spring client applications can read these properties directly with Spring's @Value annotation, as shown below.

@Value("${logging.spring-customer-service.file}")
private String loggingFileName;

Configuration Server

The Git repository below contains three microservices. The one named spring-cloudconfig is a Spring Cloud Config server application (Spring Boot) that uses the configuration repository above as its configuration source.

https://github.com/appfabs/appfabs.samples.elk.logaggregation

POM dependencies for the Spring Cloud Config server:

<dependencies>
  <dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-config-server</artifactId>
    <version>1.1.2.RELEASE</version>
  </dependency>
  <dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-security</artifactId>
  </dependency>
  <dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
  </dependency>
  <dependency>
    <groupId>junit</groupId>
    <artifactId>junit</artifactId>
    <scope>test</scope>
  </dependency>
</dependencies>

The application.properties file for the server:

server.port=8000
spring.cloud.config.server.git.uri=https://github.com/appfabs/appfabs.sample.springcloud-config-repo.git
spring.cloud.config.server.git.clone-on-start=true
security.user.name=appfabs
security.user.password=A99Fab5

Running the server

Since it is a Maven project, running mvn install generates the package in the packages folder under the project root. This is a Spring Boot application, so you can run it directly using java -jar spring-cloudconfig-0.1.jar.

The server is now accessible at http://localhost:8000/.

2. Microservices

There are two microservices in the repository:

- spring-customer-service

- spring-supplier-service

Both are Spring Boot applications that connect to the Spring Cloud Config server above when they start, using a bootstrap.properties file for that purpose.

Content of the bootstrap.properties file:

spring.application.name=appfabs-logaggregator-config
spring.profiles.active=dev
spring.cloud.config.uri=http://localhost:8000
spring.cloud.config.username=appfabs
spring.cloud.config.password=A99Fab5
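At startup, the client uses these values to request its configuration from the config server. Spring Cloud Config serves an application's environment as JSON at {uri}/{application}/{profile}, and here that endpoint is protected by HTTP Basic authentication because the server includes spring-boot-starter-security. The sketch below reproduces that request with plain JDK classes so you can inspect what the services will receive; the class and method names are illustrative.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Sketch: fetch the environment a config client would receive (Java 8, JDK only). */
final class ConfigServerRequest {

    private ConfigServerRequest() {
    }

    /** {configUri}/{application}/{profile}, tolerating a trailing slash on the URI. */
    static String environmentUrl(String configUri, String application, String profile) {
        String base = configUri.endsWith("/")
                ? configUri.substring(0, configUri.length() - 1)
                : configUri;
        return base + "/" + application + "/" + profile;
    }

    /** Value for the HTTP Authorization header using Basic authentication. */
    static String basicAuthHeader(String user, String password) {
        byte[] raw = (user + ":" + password).getBytes(StandardCharsets.UTF_8);
        return "Basic " + Base64.getEncoder().encodeToString(raw);
    }

    public static void main(String[] args) throws IOException {
        // Values taken from bootstrap.properties above.
        String url = environmentUrl("http://localhost:8000", "appfabs-logaggregator-config", "dev");
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        conn.setRequestProperty("Authorization", basicAuthHeader("appfabs", "A99Fab5"));
        try (InputStream in = conn.getInputStream();
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            byte[] buf = new byte[8192];
            int n;
            while ((n = in.read(buf)) != -1) {
                out.write(buf, 0, n);
            }
            // JSON listing the property sources that matched this application and profile.
            System.out.println(new String(out.toByteArray(), StandardCharsets.UTF_8));
        } finally {
            conn.disconnect();
        }
    }
}
```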

Source for logging

Here, the class ch.qos.logback.classic.Logger is used for logging. The Spring Cloud configuration property logging.spring-customer-service.file is also read here, just for demonstration purposes.

package org.appfabs.sample.logaggregation.customer.service;

import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.boot.CommandLineRunner;
import org.springframework.stereotype.Component;

import ch.qos.logback.classic.Logger;

@Component
public class CustomerService implements CommandLineRunner {

    private Logger logger = (Logger) LoggerFactory.getLogger(CustomerService.class);

    /**
     * Access spring cloud config variable using property. Sample usage.
     */
    @Value("${logging.spring-customer-service.file}")
    private String loggingFileName;

    @Override
    public void run(String... arg0) throws Exception {
        // Emits one message per level every second, 501 times in total.
        int i = 0;
        while (true) {
            if (i++ > 500) {
                break;
            }
            logger.debug("debug message slf4j");
            logger.info("info message slf4j");
            logger.warn("warn message slf4j");
            logger.error("error message slf4j - log file name is {}", loggingFileName);
            Thread.sleep(1000);
        }
    }
}

Logback Configuration

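This is the logback-spring.xml configuration file, which sends logs to several destinations, including Logstash. Note that the properties stored in Spring Cloud Config are read here through <springProperty> elements to configure Logback. <springProperty> is a Spring Boot extension, so it works only in logback-spring.xml, not in a plain logback.xml.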

<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <springProperty scope="context" name="logfiledirectory" source="logging.directory" defaultValue="/var/log"/>
  <springProperty scope="context" name="logfile" source="logging.spring-customer-service.file" defaultValue="spring-customer-service.log"/>
  <springProperty scope="context" name="logstashhost" source="logging.logstash.host" defaultValue="localhost:5043"/>
  <springProperty scope="context" name="maxFileSize" source="logging.filesize.max" defaultValue="100MB"/>
  <springProperty scope="context" name="maxArchiveSize" source="logging.archivesize.max" defaultValue="10GB"/>
  <springProperty scope="context" name="maxHistory" source="logging.history.max" defaultValue="60"/>
  <springProperty scope="context" name="devLogLevel" source="logging.dev.loglevel" defaultValue="DEBUG"/>
  <springProperty scope="context" name="stagingLogLevel" source="logging.staging.loglevel" defaultValue="DEBUG"/>
  <springProperty scope="context" name="productionLogLevel" source="logging.production.loglevel" defaultValue="INFO"/>

  <appender name="logStashAppender"
      class="net.logstash.logback.appender.LogstashTcpSocketAppender">
    <destination>${logstashhost}</destination>
    <!-- encoder is required -->
    <encoder class="net.logstash.logback.encoder.LogstashEncoder" />
  </appender>

  <appender name="consoleOut" class="ch.qos.logback.core.ConsoleAppender">
    <!-- encoders are assigned the type ch.qos.logback.classic.encoder.PatternLayoutEncoder
         by default -->
    <encoder>
      <pattern>%d{HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n</pattern>
    </encoder>
  </appender>

  <appender name="rolling"
      class="ch.qos.logback.core.rolling.RollingFileAppender">
    <file>${logfiledirectory}/${logfile}</file>
    <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
      <!-- rollover daily -->
      <fileNamePattern>${logfile}-%d{yyyy-MM-dd}.%i.log</fileNamePattern>
      <!-- each file should be at most 100MB, keep 60 days worth of history, but at most 10GB -->
      <maxFileSize>${maxFileSize}</maxFileSize>
      <maxHistory>${maxHistory}</maxHistory>
      <totalSizeCap>${maxArchiveSize}</totalSizeCap>
    </rollingPolicy>
    <!-- <encoder class="net.logstash.logback.encoder.LogstashEncoder" /> -->
    <encoder>
      <pattern>%d{dd-MMM-yy HH:mm:ss.SSS} %-5level [%thread] %logger{10} [%file:%line] %msg%n</pattern>
    </encoder>
  </appender>

  <springProfile name="dev">
    <root level="${devLogLevel}">
      <appender-ref ref="logStashAppender" />
    </root>
    <logger name="org" level="${devLogLevel}">
      <appender-ref ref="consoleOut" />
    </logger>
    <logger name="org.appfabs.sample.logaggregation" level="${devLogLevel}">
      <appender-ref ref="rolling" />
    </logger>
  </springProfile>

  <springProfile name="staging">
    <root level="${stagingLogLevel}">
      <appender-ref ref="logStashAppender" />
    </root>
    <logger name="org" level="${stagingLogLevel}">
      <appender-ref ref="consoleOut" />
    </logger>
    <logger name="org.appfabs.sample.logaggregation" level="${stagingLogLevel}">
      <appender-ref ref="rolling" />
    </logger>
  </springProfile>

  <springProfile name="production">
    <root level="${productionLogLevel}">
      <appender-ref ref="logStashAppender" />
    </root>
    <logger name="org" level="${productionLogLevel}">
      <appender-ref ref="consoleOut" />
    </logger>
    <logger name="org.appfabs.sample.logaggregation" level="${productionLogLevel}">
      <appender-ref ref="rolling" />
    </logger>
  </springProfile>
</configuration>

This configuration writes logs to three different destinations:

LogstashTcpSocketAppender

This appender sends logs directly over a TCP socket to the destination set by the logging.logstash.host property, configured as 192.168.0.104:5043 in the application.properties file (logback-spring.xml falls back to localhost:5043 if the property is missing). If Logstash is running on the target machine, it prints every event received from the running microservices to its console in rubydebug format. Sample output:

{
    "logfiledirectory" => "/var/log",
    "level" => "WARN",
    "logfile" => "spring-customer-service.log",
    "maxHistory" => "60",
    "logstashhost" => "192.168.0.104:5043",
    "maxFileSize" => "100MB",
    "message" => "warn message slf4j",
    "maxArchiveSize" => "10GB",
    "@timestamp" => 2017-10-16T17:17:14.102Z,
    "port" => 15646,
    "thread_name" => "main",
    "level_value" => 30000,
    "productionLogLevel" => "INFO",
    "@version" => 1,
    "host" => "192.168.0.101",
    "stagingLogLevel" => "INFO",
    "logger_name" => "org.appfabs.sample.logaggregation.customer.service.CustomerService",
    "devLogLevel" => "DEBUG"
}
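Two details of this output are useful when you search it later. First, level_value is Logback's numeric level (WARN is 30000, ERROR is 40000), which makes range queries such as "warnings and above" easy. Second, fields such as logfiledirectory, maxHistory and devLogLevel are not log data: they are the <springProperty scope="context"> values, which LogstashEncoder copies into every event because it includes Logback context properties by default (adding <includeContext>false</includeContext> to the encoder drops them). The small, hypothetical helper below maps level names to those numbers and builds a Lucene query-string clause that could be typed into the Kibana search bar.

```java
import java.util.Locale;

/** Sketch: Logback level names and the level_value numbers LogstashEncoder emits. */
final class LogbackLevelValues {

    private LogbackLevelValues() {
    }

    /** Same integers as ch.qos.logback.classic.Level.TRACE_INT ... ERROR_INT. */
    static int levelValue(String level) {
        switch (level.toUpperCase(Locale.ROOT)) {
            case "TRACE": return 5000;
            case "DEBUG": return 10000;
            case "INFO":  return 20000;
            case "WARN":  return 30000;
            case "ERROR": return 40000;
            default: throw new IllegalArgumentException("Unknown level: " + level);
        }
    }

    /** Lucene query-string clause matching events at or above the given level. */
    static String atLeast(String level) {
        return "level_value:>=" + levelValue(level);
    }

    public static void main(String[] args) {
        System.out.println(atLeast("WARN")); // level_value:>=30000
    }
}
```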

ConsoleAppender

This will print logs to standard output.

RollingFileAppender

This appender writes logs to a file. The configured target locations are /var/log/spring-customer-service.log and /var/log/spring-supplier-service.log, so the account running the services typically needs write permission on /var/log.
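With the SizeAndTimeBasedRollingPolicy above, the active file is ${logfiledirectory}/${logfile}. When the day changes or the file reaches maxFileSize, Logback archives it under a name built from fileNamePattern, where %d is the day and %i is an index starting at 0 within that day. The hypothetical helper below only reproduces the file name part of ${logfile}-%d{yyyy-MM-dd}.%i.log. Note that, as written, this pattern has no directory prefix, so archives appear to be resolved relative to the application's working directory rather than /var/log; prefixing it with ${logfiledirectory}/ would keep them next to the active file.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

/** Sketch: archive file names produced by fileNamePattern ${logfile}-%d{yyyy-MM-dd}.%i.log. */
final class RollingArchiveNames {

    private static final DateTimeFormatter DAY = DateTimeFormatter.ofPattern("yyyy-MM-dd");

    private RollingArchiveNames() {
    }

    /** %d is replaced by the archived day, %i by a per-day index starting at 0. */
    static String archiveName(String logfile, LocalDate day, int index) {
        return logfile + "-" + DAY.format(day) + "." + index + ".log";
    }

    public static void main(String[] args) {
        LocalDate day = LocalDate.of(2017, 10, 16);
        // Successive archives from the same day get indexes 0, 1, 2, ...
        for (int i = 0; i < 3; i++) {
            System.out.println(archiveName("spring-customer-service.log", day, i));
        }
    }
}
```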

POM for the microservices (spring-customer-service shown):

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>appfabs.sample.logaggregation</groupId>
  <artifactId>spring-customer-service</artifactId>
  <version>0.1</version>
  <packaging>jar</packaging>
  <name>spring-customer-service</name>
  <url>http://maven.apache.org</url>
  <properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
  </properties>
  <parent>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-parent</artifactId>
    <version>1.5.4.RELEASE</version>
    <relativePath /> <!-- lookup parent from repository -->
  </parent>
  <dependencies>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-hateoas</artifactId>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-jdbc</artifactId>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-security</artifactId>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
      <groupId>org.postgresql</groupId>
      <artifactId>postgresql</artifactId>
      <scope>runtime</scope>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-test</artifactId>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>com.h2database</groupId>
      <artifactId>h2</artifactId>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.springframework.cloud</groupId>
      <artifactId>spring-cloud-starter-config</artifactId>
      <version>1.3.3.RELEASE</version>
    </dependency>
    <!-- https://mvnrepository.com/artifact/commons-logging/commons-logging -->
    <dependency>
      <groupId>commons-logging</groupId>
      <artifactId>commons-logging</artifactId>
      <version>1.1.1</version>
    </dependency>
    <dependency>
      <groupId>net.logstash.logback</groupId>
      <artifactId>logstash-logback-encoder</artifactId>
      <version>4.11</version>
    </dependency>
    <dependency>
      <groupId>ch.qos.logback</groupId>
      <artifactId>logback-core</artifactId>
    </dependency>
    <dependency>
      <groupId>ch.qos.logback</groupId>
      <artifactId>logback-classic</artifactId>
    </dependency>
    <dependency>
      <groupId>ch.qos.logback</groupId>
      <artifactId>logback-access</artifactId>
    </dependency>
  </dependencies>
  <build>
    <plugins>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-compiler-plugin</artifactId>
        <configuration>
          <source>1.8</source>
          <target>1.8</target>
        </configuration>
      </plugin>
      <plugin>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-maven-plugin</artifactId>
        <configuration>
          <executable>true</executable>
          <outputDirectory>../packages/</outputDirectory>
        </configuration>
      </plugin>
    </plugins>
  </build>
</project>

Running the Microservices

Since these are Maven projects, running mvn install generates the packages in the packages folder under the project root. These are Spring Boot applications, so you can run them directly using java -jar spring-customer-service-0.1.jar and java -jar spring-supplier-service-0.1.jar. Start the config server first, since both services fetch their configuration from it at startup.

Conclusion

If all the ELK services are running correctly and the microservices are sending logs, the Logstash terminal prints one entry per log event, in the same format as the sample shown in the LogstashTcpSocketAppender section above.

Now go to Kibana and create an index pattern named appfabs-logback*. All the logs will be available under this index pattern, and you can start analyzing them in Kibana.

Source Code

You can clone the source from GitHub using git clone https://github.com/appfabs/appfabs.samples.elk.logaggregation.git, or browse it at https://github.com/appfabs/appfabs.samples.elk.logaggregation.

Contact

Prathapachandran V
